The rapid development of artificial intelligence (AI) has sparked intense debates about its implications, particularly concerning the emulation of human behavior and decision-making. As we advance further into the realm of AI that can replicate, simulate, or enhance human traits, various ethical considerations arise. This article aims to explore common questions surrounding the ethical challenges posed by AI and human emulation.

What are the main ethical concerns associated with AI emulating human behavior?

Several ethical concerns emerge when AI systems attempt to emulate human behavior. These include:

- Dehumanization: There is a risk that as machines become more human-like, society may begin to devalue genuine human interaction, leading to potential isolation and social disconnection.

- Manipulation: AI systems can be programmed to exploit human emotions, which raises concerns about the potential for manipulation in fields like marketing or politics.

- Accountability: When an AI system makes an error or a harmful decision, it is often unclear whether responsibility lies with the developer, the organization deploying it, or the user.

- Bias and Fairness: AI systems often reflect the biases present in their training data, which can lead to discriminatory outcomes that mimic or even exacerbate existing societal biases.
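One way to make the bias concern concrete is to compare how often a system gives a favorable outcome to different groups. The sketch below computes that gap, often called the demographic parity difference. The function names and the sample decisions are illustrative assumptions, not taken from any real system, and a small gap on this one measure does not by itself show that a system is fair.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable decisions for each group.

    `records` is an iterable of (group, decision) pairs, where decision
    is 1 for a favorable outcome and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Return the difference between the highest and lowest group rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical, made-up decisions for two groups labeled "A" and "B".
decisions = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = selection_rates(decisions)
gap = demographic_parity_gap(decisions)
print(rates)
print(gap)
```

A large gap is a signal to investigate the training data and the decision logic, not proof of discrimination on its own.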

Can AI truly understand human emotions?

While AI can be programmed to recognize and respond to human emotions through techniques such as natural language processing and facial expression analysis, it does not experience emotions in the way humans do. AI’s understanding is purely computational and lacks subjective consciousness. Ethical problems arise when users are misled into believing that an AI has genuine empathy or emotional understanding.

What measures can be taken to ensure ethical AI development?

To support ethical AI development, several measures can be considered:

- Transparency: Developers should disclose how AI systems function, including their limitations and biases, in terms that users can understand.

- Inclusion: Engaging diverse perspectives in the development process can help mitigate unconscious biases and promote fairer outcomes.

- Regulation: Government regulations and ethical frameworks can provide guidelines for safe AI usage and reduce potential harm.

- Continuous Review: Ongoing assessments of AI systems should be conducted to identify and rectify ethical issues as they evolve.

How does human emulation in AI impact employment?

The emulation of human skills by AI can substantially impact employment. While AI can enhance productivity and efficiency in various sectors, it may also lead to job displacement, particularly for roles that involve routine and repetitive tasks. Ethical considerations should center on managing workforce transitions and ensuring that displaced workers have access to retraining opportunities.

What role does consent play in AI emulating human behavior?

Consent is a critical factor in ethical AI practices, especially when systems mimic human behaviors or characteristics. Users should be informed when they are interacting with AI and must have the option to consent to such interactions. Additionally, data privacy is essential; individuals must consent to their data being used for AI training and development.

What potential benefits can arise from ethically sound AI human emulation?

When developed ethically, AI that emulates human behavior can offer several advantages, such as:

- Improved Accessibility: AI can assist individuals with disabilities by providing enhanced communication methods or physical assistance.

- Personalized Education: AI tutors can adapt to individual learning styles, improving educational outcomes for students.

- Enhanced Emotional Support: AI companions can provide support for mental health, offering a non-judgmental space for individuals to express themselves.

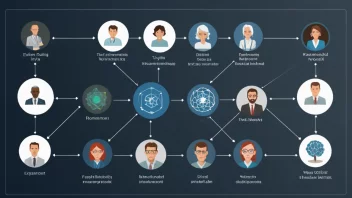

Final Thoughts: The ethical considerations surrounding AI and human emulation are multifaceted. As AI is integrated into daily life, these dilemmas need to be addressed deliberately. Stakeholders (developers, policymakers, and the public) must work together to create an ethical framework that safeguards human dignity while embracing technological advances. The aim should be to enhance human capability rather than replace it, so that AI supports and enriches human experience.